/ ABOUT

OK to Touch!? is an interactive experience that brings inconspicuous tracking technology into the spotlight and makes it visible to users through interactions with everyday objects. The concept uses first-hand experience to convey how users’ private data could be tracked, without their consent, in the digital future.

/ DESCRIPTION

A variety of popular scripts are invisibly embedded on many web pages, harvesting a snapshot of your computer’s configuration to build a digital fingerprint that can be used to track you across the web, even if you clear your cookies. It is only a matter of time before these tracking technologies take over the ‘Internet of Things’ we are starting to surround ourselves with. From billboards to books and from cars to coffeemakers, physical computing and smart devices are becoming more ubiquitous than we can fathom.
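To make the idea concrete, the sketch below shows how a handful of configuration values could be combined into one stable identifier. It is a minimal illustration rather than the method any real tracking script uses: the attribute names (`language`, `screen`, `timezone`) and the `fingerprint` helper are hypothetical, and the FNV-1a hash is chosen here only because it is short to write out.

```javascript
// Hypothetical sketch: derive a stable ID from a snapshot of device settings.
// Real fingerprinting scripts read many more signals; these names are assumptions.

// 32-bit FNV-1a hash over the UTF-16 code units of a string, as 8 hex characters.
function fnv1a(text) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime, keep 32 bits
  }
  return hash.toString(16).padStart(8, "0");
}

// Serialize the snapshot with sorted keys so property order does not matter.
function fingerprint(config) {
  const serialized = Object.keys(config)
    .sort()
    .map((key) => `${key}=${config[key]}`)
    .join("|");
  return fnv1a(serialized);
}

const visitA = { language: "en-CA", screen: "1440x900", timezone: "UTC-5" };
// Same device on a later visit, after cookies were cleared.
const visitB = { timezone: "UTC-5", screen: "1440x900", language: "en-CA" };
// A device that differs in a single setting.
const otherDevice = { language: "en-CA", screen: "1440x900", timezone: "UTC-4" };

console.log(fingerprint(visitA) === fingerprint(visitB));      // same settings, same ID
console.log(fingerprint(visitA) === fingerprint(otherDevice)); // one setting differs
```

Because the identifier is recomputed from the device's own settings on every visit, nothing has to be stored in the browser, which is why clearing cookies does not reset it.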

For users of smart devices, the very first click or touch on a smart object signs us up to be tracked online and to become another data point in the web of ‘Device’ Fingerprinting, with no conspicuous privacy policies and no apparent warnings. With this interactive experience, the designers attempt to ask the question:

How might we create awareness about the invisible data tracking methods as the ‘Internet of Things’ expands into our everyday objects of use?

/ OK to Touch!?

CLASSROOM PROJECT

OCAD University

Mentors: Kate Hartman & Nick Puckett

TEAM

Manisha Laroia

Priya Bandodkar

DURATION

2 weeks | 2019

EXPERIMENTS WITH CODE

Code | Computer Vision | p5js

Exhibited at the OCADU Digital Futures OPEN SHOW 2019.

/ BACKGROUND

The effects of data breaches on political, marketing and technological practices are evident in the Facebook–Cambridge Analytica data scandal, the Aadhaar login breach and Google Plus exposing the data of 500,000 people, then 52.5 million, to name a few. When the topic of data privacy comes up in discussion circles, some get agitated about their freedom being affected, some plead the fifth and some say, ‘we have nothing to hide.’ Data privacy is not about hiding but about being ethical. Much of the data shared across the web is used by select corporations to make profits at the cost of the individual’s Digital Labour. That is why no free software is truly free: it comes at the cost of your labour in using it and of allowing the data you generate to be used.

Most people do not know what is running in the background of the webpages they visit or the voice interactions they have with their devices, and if they don’t see it they don’t believe it. With more and more conversation happening around data privacy and ethical design, we believed it would help to make this invisible background data transmission visible to the user and initiate a discourse.

/ INSPIRATION

The core inspiration for the project comes from Mozilla’s Building a Healthier Internet initiative, which one of us had been following through their blog and their podcast IRL: Online Life is Real Life. The blog article This is Your Digital Fingerprint talks about 'the quantified self movement (or “lifelogging”) and the Internet of Things initiatives that expand data collection beyond our web activity and into our physical lives by creating a network of connected appliances and devices, which, if current circumstances persist, probably have their own trackable fingerprints.'

Using creative code overlaid on real images was inspired by The Touch Project. The designers of the Touch project, which explores near-field communication (NFC), or close-range wireless connections between devices, set out to make the immaterial visible, specifically one such technology, radio-frequency identification (RFID), currently used for financial transactions, transportation, and tracking anything from live animals to library books. “Many aspects of RFID interaction are fundamentally invisible,” explains Timo Arnall from the project.

Paper Phone was another project that takes the virtual experience into the physical and addresses the blurred lines between the two, which we have also attempted in our project while talking about everyday objects being sources of privacy invasion. These sources helped define the question we were asking and inspired us to show the connection between the physical and the digital, making the invisible visible and tangible enough to accept.

/ THE PROCESS

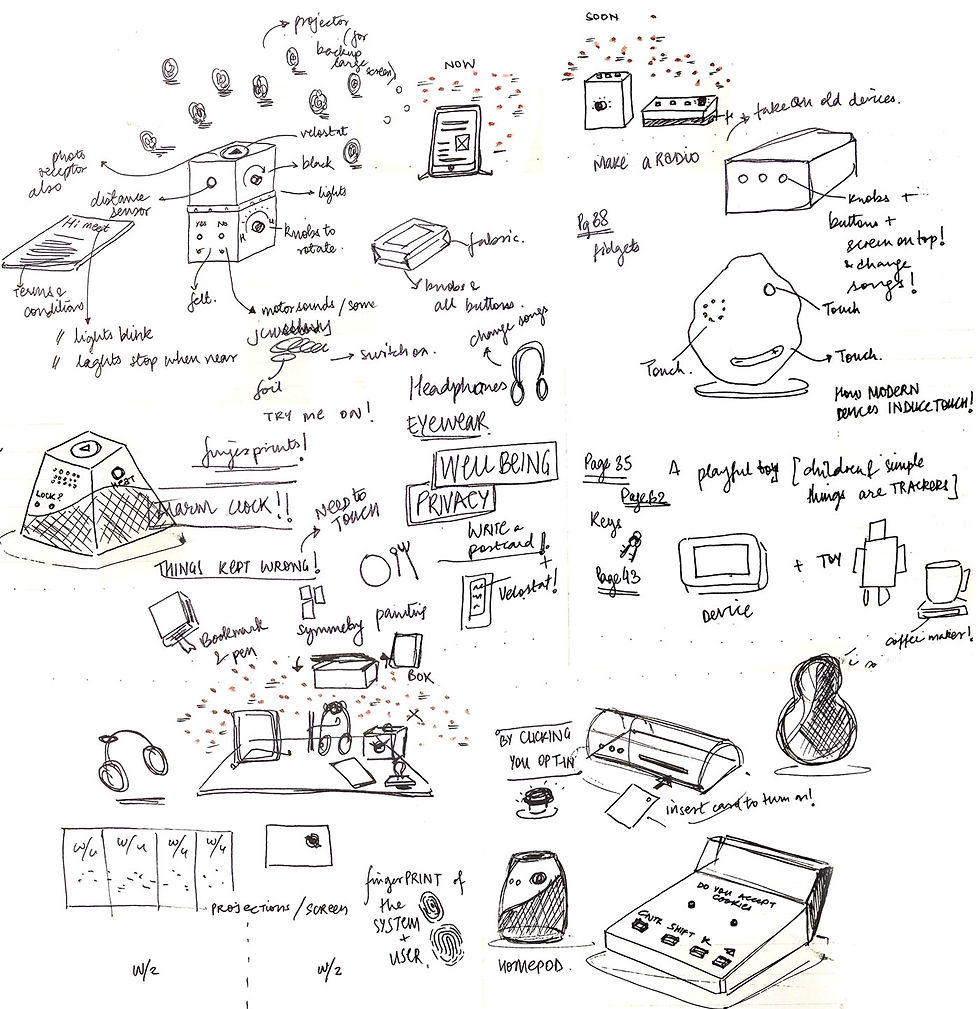

The interactive experience was inspired by the ‘How Might We’ question we raised after our research on data privacy, and we began sketching out the details of the interaction:

- Which interactions did we want: touch, sound, voice or tapping into user behaviour?
- Which tangible objects should we use: daily objects, a new product with built-in affordances to interact with, or digital products like mobile phones and laptops?
- Which programming platform should we use?
- What would the setup and user experience be?

While proposing the project, we intended to build tangible interactions using Arduino embedded in desk objects, paired with Processing to create visuals that would illustrate the data tracking. We wanted the interactions to be seamless and the setup to look normal, intuitive and inconspicuous, reflecting the hidden, creepy nature of data tracking techniques. Here is the initial setup we had planned to design:

Interestingly, in our early proposal discussion we raised concerns about having too many wires in the display if we used Arduino, and our mentors proposed we look at ml5, a machine learning library that works with p5js and can recognize objects using computer vision. We tried the YOLO model in ml5 and iterated on the code, trying to recognize objects like remotes, mobile phones, pens and books. The challenge with this particular code lay in creating the visuals we wanted to accompany each recognized object, tracking multiple interactions and overlaying the visuals on the video being captured for computer vision. It was very exciting for us to use this library, as we did not have to depend on hardware interactions: we could use a setup with no wires and no visible digital interactions, a mundane setup that could then bring in the surprise of the tracking visuals and aid the concept.
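One way to think about attaching a visual to each recognized object is sketched below. It assumes, hypothetically, that the detector returns a list of detections with a `label`, a `confidence` and a bounding box normalized to the 0 to 1 range. The `toOverlays` helper, its field names and the sample data are all illustrative; they are not taken from the project code and are not guaranteed to match the ml5 output format.

```javascript
// Hypothetical sketch: turn object detections into overlay rectangles.
// The detection shape ({label, confidence, x, y, w, h} with 0..1 coordinates)
// is an assumption made for illustration.
function toOverlays(detections, frameWidth, frameHeight, watched, minConfidence = 0.5) {
  return detections
    // Keep only confident detections of the objects we care about.
    .filter((d) => watched.includes(d.label) && d.confidence >= minConfidence)
    // Scale normalized coordinates to pixels of the video frame.
    .map((d) => ({
      label: d.label,
      x: Math.round(d.x * frameWidth),
      y: Math.round(d.y * frameHeight),
      w: Math.round(d.w * frameWidth),
      h: Math.round(d.h * frameHeight),
    }));
}

const sample = [
  { label: "book", confidence: 0.9, x: 0.25, y: 0.5, w: 0.5, h: 0.25 },
  { label: "cell phone", confidence: 0.3, x: 0.1, y: 0.1, w: 0.2, h: 0.2 }, // too uncertain
  { label: "person", confidence: 0.95, x: 0, y: 0, w: 1, h: 1 },            // not a watched object
];

const overlays = toOverlays(sample, 640, 480, ["book", "cell phone", "remote"]);
console.log(overlays);
```

Each rectangle that comes back could then be drawn over the video frame, so that several objects can carry their own visual at the same time.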

While using the ml5 library we also came across the OpenCV libraries that work with Processing and p5js, and iterated with them to use the pixel change, or frame difference, function. We created overlay visuals both on the video capture and without it, creating a data tracking map of sorts. Eventually we used the optical flow library example and built the final visual output on it. For input, we used a webcam and ran the captured video feed through p5js.
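The frame difference idea can be sketched in a few lines. The example below is a minimal illustration, not the project's actual code: it assumes each frame is a flat RGBA array with four values per pixel (the layout p5js uses for its `pixels` arrays), and the `changedPixels` helper and its `threshold` default are hypothetical.

```javascript
// Hypothetical sketch of frame differencing on two RGBA frames of equal size.
// Returns the coordinates of pixels whose average colour change exceeds `threshold`.
function changedPixels(previous, current, width, height, threshold = 40) {
  const points = [];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = 4 * (y * width + x); // index of the red channel for pixel (x, y)
      const delta =
        (Math.abs(current[i] - previous[i]) +
          Math.abs(current[i + 1] - previous[i + 1]) +
          Math.abs(current[i + 2] - previous[i + 2])) / 3; // alpha is ignored
      if (delta > threshold) points.push({ x, y });
    }
  }
  return points;
}

// Two tiny 2x2 frames: only the bottom-right pixel changes from black to white.
const before = new Uint8ClampedArray(16); // all zeros
const after = new Uint8ClampedArray(16);
after.set([255, 255, 255, 255], 12);

console.log(changedPixels(before, after, 2, 2));
```

Each returned point marks a place where something moved between frames, which is the kind of signal that can drive tracking visuals drawn over the video feed.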

/ CHALLENGES & LEARNINGS

Our biggest learning came from prototyping and building setups to test with users, which helped us understand the nuances of creating an engaging experience.

The final setup was to have a webcam on top to track any interaction with the products on the table; the input video feed would then be processed to give a digital output of data tracking visualizations. For the output we tried various combinations: using a projector to throw visuals as the user interacted with the objects, using an LCD display to overlay the visuals on the video feed, or using a screen in the background to form a map of the data points collected through the interaction. We felt the top projection would be a very interesting output method, as it would let us throw a projection onto the products as the user interacted with them, creating the visual layer of awareness we wanted. Unfortunately, each time we used top projection the computer vision code picked up a lot of visual noise: each projection was captured by the webcam and fed back in as input, so a loop of feeds built up, creating noise and multiple projections that were unnecessary for the discreet experience we wanted to create. Projections also looked best in dark spaces, but darkness would compromise the effectiveness of the webcam, and computer vision was the backbone of the project. Eventually we used an LCD screen and a top-mounted webcam.

/ CHOICE OF AESTHETICS

The final video feed with the data tracking visuals looked more like an infographic and was subtle in nature compared to the strange, surveillance-like experience we wanted to create. So we decided to apply a video filter as an additional visual layer on the video capture, to show that it had undergone some processing and was being watched or tracked.

The video was displayed on a large screen placed adjacent to a mundane desk with typical desk objects like books, lamps, plants, stationery, stamps, a cup and blocks.

Having a bare webcam during the critique made the kind of interaction evident to users. Learning from that, we hid the webcam in a paper lamp in the final setup. This added another cryptic layer to the interaction, adding to the concept.

These objects were chosen and displayed so as to create a desk workspace where people could sit down and start interacting with the objects through the affordances created. Affordances were created using semi-opened books, bookmarks inside books, an open notepad with stamps and ink pads, a small semi-opened wooden box, a half-filled cup of tea with a tea bag, a wooden block, stationery objects and a magnifying glass, all to hint at a simple desk that could well be a smart desk, tracking each move of the user and transmitting data without consent on every interaction with the objects. The webcam was hung over the table and discreetly covered by a paper lamp to add to the everyday-ness of the desk setup.

Each time a user interacted with the setup, the webcam would track the motion and the changes in the pixel field and generate data-capturing visuals, signalling to the user that something strange was happening and making them question whether it was OK to Touch!?

The project code is available on GitHub.

/ REFERENCES

Briz, Nick. “This Is Your Digital Fingerprint.” Internet Citizen, 26 July 2018, www.blog.mozilla.org/internetcitizen/2018/07/26/this-is-your-digital-fingerprint/.

Chen, Brian X. “‘Fingerprinting’ to Track Us Online Is on the Rise. Here’s What to Do.” The New York Times, The New York Times, 3 July 2019, www.nytimes.com/2019/07/03/technology/personaltech/fingerprinting-track-devices-what-to-do.html.

Groote, Tim. “Triangles Camera.” OpenProcessing, www.openprocessing.org/sketch/479114.

Grothaus, Michael. “How Our Data Got Hacked, Scandalized, and Abused in 2018.” Fast Company, 13 December 2018, www.fastcompany.com/90272858/how-our-data-got-hacked-scandalized-and-abused-in-2018.

Hall, Rachel. “Terror and the Female Grotesque: Introducing Full-Body Scanners to the U.S. Airports.” Feminist Surveillance Studies, edited by Rachel E. Dubrofsky and Shoshana Amielle Magnet, Duke University Press, 2015, ch. 7, pp. 127-149.

Khan, Arif. “Data as Labor.” SingularityNET, Medium, 19 November 2018, blog.singularitynet.io/data-as-labour-cfed2e2dc0d4.

Szymielewicz, Katarzyna, and Bill Budington. “The GDPR and Browser Fingerprinting: How It Changes the Game for the Sneakiest Web Trackers.” Electronic Frontier Foundation, 21 June 2018, www.eff.org/deeplinks/2018/06/gdpr-and-browser-fingerprinting-how-it-changes-game-sneakiest-web-trackers.

Antonelli, Paola. “Talk to Me: Immaterials: Ghost in the Field.” MoMA, www.moma.org/interactives/exhibitions/2011/talktome/objects/145463/.

Shiffman, Daniel. “Computer Vision: Motion Detection – Processing Tutorial.” The Coding Train, YouTube, 6 July 2016, www.youtube.com/watch?v=QLHMtE5XsMs.